Companies throw away millions of pounds every day — not because they lack good ideas but because they commit too soon.

In my last post, The Success Delusion: Why Teams Stop Thinking Once They Ship, I explored how teams mistake shipping for success — ignoring whether what they built actually works.

But there’s an even bigger problem before that — how teams decide what to build in the first place.

Some teams believe speed is the smart play. They lock in ideas, fund initiatives, and confidently march toward delivery.

Others take a different approach. They assume most ideas sound good in theory but don’t hold up under testing, comparison, and validation. Experience has taught them that even innovative ideas can quickly become expensive gambles.

One of these teams pays a high Success Tax. The other barely pays at all.

Deciders vs. Seekers

There are two types of product teams: those who commit too soon and those who validate first. I call them Deciders and Seekers.

Deciders treat every idea like a precious metal. They obsess over crafting the perfect roadmap, writing beautiful strategy docs, and getting buy-in for their “game-changing” gold-plated initiatives. They fall in love with their own genius. Before you know it, the idea is locked in — funded, staffed, and death-marching toward delivery.

Seekers, on the other hand, treat every idea like a bet. They resist the urge to go big or commit too soon. Instead, they test, compare, and validate before placing expensive bets. They know that the faster they kill bad ideas, the more time they have to invest in great ones.

Guess which team pays the highest Success Tax?

It’s always the Deciders.

Everyone pays some tax, but the rate is variable. Seekers minimise their exposure by testing before committing. Deciders, conversely, rack up massive bills, wasting months (or years) on ideas they should have quit long ago.

The Power of Quitting (at the Right Time)

Why does this happen?

Deciders think quitting makes them a failure. Seekers know quitting is a skill.

In Quit, Annie Duke (so much gold in her writing) makes a brilliant point: “People think quitting is the opposite of grit, but that’s not true. Grit isn’t about blindly persisting — it’s about knowing when to push forward and when to walk away.”

And here’s the painful truth:

“When we finally quit, we realise we should have done it long ago.” — Annie Duke.

When a team finally admits an idea isn’t working, they don’t just regret the decision — they regret how long it took to make it. Looking back, they see the wasted months, the sunk costs, the red flags they ignored.

The Success Tax is the price of refusing to quit when you should.

But Seekers don’t quit willy-nilly. They quit intelligently, dropping the wrong ideas early, before the costs mount up and they become expensive failures. They quit certainty and replace it with learning.

Deciders? They cling to ideas because they’ve already committed. They equate quitting with losing, even when the actual loss is sticking with the wrong thing for too long.

Why Do Some Teams Keep Paying More Success Tax? (Common Rationalisations)

No one gets a choice about paying the Success Tax. The tax is always there. The only difference is how much you pay and when.

I’ve never met a team that chooses to pay more. They rationalise their way into it, telling themselves stories that sound smart but are really just excuses.

Let’s explore the most common lies teams tell themselves before writing a massive cheque to the Success Tax collector.

1. “We already know what the customer wants.” (The Expertise Fallacy)

- A stakeholder swears, “We’ve talked to customers, and they keep asking for this.”

- A PM is convinced, “We’ve seen this before. Trust me.”

- The whole team assumes, “We wouldn’t all be here discussing this if it wasn’t a good idea.”

Reality Check: You think you know. But markets shift. Users behave unpredictably. And even if the idea itself is good, execution still matters.

How to Expose It: Ask:

- “What evidence do we have beyond gut feeling?”

- “What would convince us we’re wrong?”

If no one has a clear answer, you’re already in trouble.

2. “We don’t have time to test — we need to move fast.” (The Speed Trap)

- Leadership demands urgency.

- Competitors are launching new features.

- Engineers are waiting for the specs.

So, the team skips validation. They jump straight to building the solution.

Reality Check: Moving fast in the wrong direction isn’t progress — it’s waste. Testing doesn’t slow you down. It prevents you from wasting time on bad ideas.

How to Expose It: Ask:

- “What’s the fastest way to learn if this idea is worth pursuing?”

- “What’s the cost if we build the wrong thing?”

Good teams realise the fastest path is the one that avoids unnecessary work.

3. “The CEO/stakeholder wants this.” (The HiPPO Problem)

(HiPPO = Highest Paid Person’s Opinion)

- A big-name exec walks into the room and says, “I want this built.” The team panics. Someone whispers, “We can’t say no to the CEO.” And just like that, testing goes out the window.

Reality Check: Stakeholders want outcomes, not just ideas, and the best ones respect evidence. If an exec’s idea is good, great! But if it’s wrong, wouldn’t they rather know before wasting time, effort and resources? And that’s before we even talk about opportunity cost.

How to Expose It: Instead of arguing, frame testing as risk reduction and strategic alignment:

- “We love that idea. Let’s run a quick test to ensure it works before committing big.”

- “What would success look like? How will we know this is the right bet?”

- “This will also help us map it back to our strategy and the outcomes we’re already working toward.”

Make it about helping the stakeholder, not resisting them.

4. “It worked for [big company].” (The False Analogy Fallacy)

- “Amazon did this, and look where they are now!”

- “This worked for a startup I worked at before.”

- “Google’s product team runs like this, and they’re Google.”

Reality Check: What worked for them might not work for you. You have different users, different markets, and different timing.

How to Expose It: Ask:

- “What was different about their context vs. ours?”

- “What assumptions did they rely on? Do those apply to us?”

Mindlessly copying another company’s playbook isn’t a strategy — it’s wishful thinking.

5. “We’ll test after we launch.” (The Sunk Cost Trap)

- “We’ll just get this out the door and see what happens.”

- “If it flops, we’ll iterate.”

Reality Check: Learning is most expensive after launch. By then, teams have sunk costs, internal political pressure, and inertia working against them. Instead of pivoting, they double down.

How to Expose It:

- “What happens if this doesn’t work?” (Watch for woolly answers.)

- “How hard would it be to reverse this decision later?” (Is it a Type 1, one-way-door decision, or a Type 2, two-way-door one?)

If the team gets defensive, that’s a sign they’re already emotionally attached to the idea.

6. “But we’ve run the ROI numbers, and the idea is worth it.” (The Illusion of Certainty)

- “We crunched the numbers — if this works, we’ll generate £X in revenue.”

- “The business case makes this a no-brainer — finance is convinced!”

- “If we don’t do this, we’re leaving money on the table.”

Reality Check: ROI calculations are only as good as their assumptions. And assumptions without validation? That’s just wishful thinking in spreadsheet form.

This is where Expected Value (EV) thinking is powerful: instead of asking only what we stand to gain, we also weigh the likelihood of success. I wrote about this previously in a post, ‘The Product Manager’s Guide to Playing the Odds: Making Smart Bets in an Uncertain World.’

How to Expose It:

- “What’s the probability that we’ll achieve this ROI?”

- “What assumptions need to be true for this ROI calculation to hold?”

- “What’s the downside risk if we’re wrong?”

Pro move: Instead of just showing one ROI estimate, great teams use Expected Value (EV):

EV = (probability of success × expected gain) − (probability of failure × expected loss).

If you don’t know these probabilities? That’s the tax you’re paying for skipping validation.
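To see how much the probability estimate matters, here is a minimal Python sketch of the EV formula. It assumes “expected loss” is entered as a positive cost and that the probability of failure is simply 1 minus the probability of success. The `expected_value` helper and all £ figures are hypothetical, chosen only for illustration.

```python
def expected_value(p_success: float, gain: float, loss: float) -> float:
    """EV = (p_success x gain) - (p_failure x loss).

    p_success: estimated probability the bet pays off, between 0 and 1.
    gain: payoff if it succeeds.
    loss: cost if it fails, given as a positive number.
    """
    if not 0.0 <= p_success <= 1.0:
        raise ValueError("p_success must be between 0 and 1")
    p_failure = 1.0 - p_success  # assumes success and failure are the only outcomes
    return p_success * gain - p_failure * loss


# Hypothetical business case: £500k upside, £200k spent if it flops.
spreadsheet_view = expected_value(0.80, 500_000, 200_000)  # the confident ROI story
unvalidated_view = expected_value(0.25, 500_000, 200_000)  # a sober guess with no evidence yet

print(f"EV at 80% success: £{spreadsheet_view:,.0f}")
print(f"EV at 25% success: £{unvalidated_view:,.0f}")
```

Same upside, same cost: at 80% the bet is worth roughly £360k, while at 25% it is worth about −£25k. Validation is what tells you which probability is closer to the truth.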

7. “Our Competitor Has This — We Need It Too.” (The Survivorship Bias Fallacy)

- “We can’t afford to fall behind — Competitor X has already launched this.”

- “If they’re investing in it, it must be working.”

- “We need feature parity.”

Reality Check: Just because a competitor has something doesn’t mean it’s working. They might be keeping it alive due to sunk costs, internal politics, or a long-term bet that hasn’t paid off (and may never). There is a flip side, though: some features really are table stakes. If every competitor has one and customers now expect it, not having it could put you at a competitive disadvantage.

How to Expose It:

- “Is this truly differentiating, or just table stakes?”

- “Do our customers expect this, or are we assuming they do?”

- “Would we still build this if our competitors didn’t have it?”

Pro move: Instead of copying, focus on what uniquely differentiates you. If it’s table stakes, find the fastest, leanest way to deliver it while still investing in areas where you can truly stand out.

How Do You Avoid These Bear Traps?

The first step is realising that these rationalisations aren’t logical. They’re driven by either fear or false certainty:

- Fear of slowing down.

- Fear of being wrong.

- Fear of pushing back on a stakeholder.

- False certainty that their ROI math is airtight.

- False certainty that copying a competitor is automatically the right move.

Seekers face these fears by testing before committing. They challenge their own certainty, validate assumptions, and recognise when something is table stakes vs. a differentiator.

Deciders ignore these fears — or worse, disguise them as confidence. That’s how they end up paying the highest Success Tax.

The Mindset Shift: From Idea Worship to Evidence Seeking

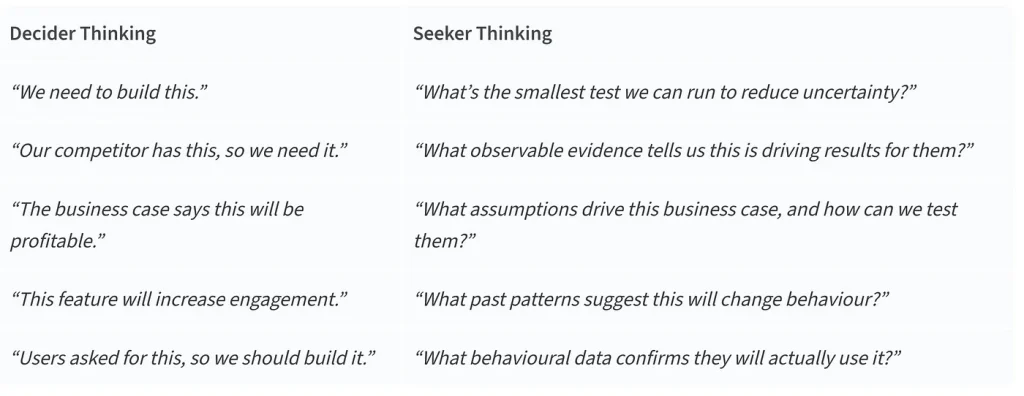

If there’s one thing Deciders and Seekers fundamentally disagree on, it’s this:

- Deciders think their job is to pick the best idea — they treat decisions as binary choices — should we build this or not?

- Seekers think their job is to discover the best idea — they treat decisions as comparisons — what’s the best way to solve this problem?

The difference is subtle but massive. Deciders think in certainties. Seekers think in probabilities.

The good news? Mindset isn’t fixed. Deciders can become Seekers. But first, they must let go of the biggest myth in decision-making…

The Myth of the One Right Idea

Deciders tend to fixate on one solution at a time. They believe their job is to choose the right idea and then execute it flawlessly.

This is a trap.

In reality, no idea is guaranteed to be correct. Every idea is a bet, and the best teams don’t just ask, “Is this a good idea?” — they ask:

- “What needs to be true for this to work?”

- “How does this compare to other solutions?”

- “What’s the smallest thing we can do to test it?”

From Binary Decisions to Compare & Contrast Thinking

This is where Teresa Torres’ Compare & Contrast Thinking changes the game. Instead of defaulting to “Should we do this or not?”, great teams ask:

- What alternative solutions exist? If we only test one idea, we’re not testing — we’re just hoping.

- What trade-offs are we making? Every decision has opportunity costs. If we invest in this, what are we not doing?

- What does success look like? (And what does failure look like?) How will we know this is working?

- What are our quitting criteria? What signals would tell us to pivot or kill this idea before we sink too much into it?

From Faith-Based Decisions to Evidence-Based Decisions

This shift, from picking to discovering, changes everything. But how do you move from Decider mode to Seeker mode? The key is to replace assumptions with evidence.

The Bottom Line: Seekers pay less Success Tax because they test, compare, and validate. What they pay up front is tiny by comparison, and they avoid the huge bill later.

So, if you want to avoid sinking months into a doomed idea, stop worshipping ideas and start testing them. Remember: it’s not about choosing the best idea; it’s about discovering it.

The Practices That Expose Logical Fallacies & Create Clarity

Mindset is crucial, but sadly, not enough on its own. Seekers don’t just think differently — they work differently. They use structured practices to expose assumptions, surface risks, and create clarity before committing to bigger bets.

Here’s how they do it:

1. Assumption Mapping: Identifying What Needs to Be True

- Before testing anything, Seekers first ask: What assumptions must hold for this idea to succeed?

How It Works:

- List out every assumption the idea depends on.

- Rank them by Impact (how critical each one is to success) and Certainty (how much we actually know). I use the Certainty Meter (by Itamar Gilad) to gauge confidence.

- Test the highest-impact, lowest-certainty assumptions first.
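To make the ranking step concrete, here is a minimal Python sketch. Combining the two rankings into a single risk score (impact × uncertainty, both on a 1–5 scale) is an assumption of this example, not part of any standard method. The scale stands in for whatever you actually use, such as a reading from the Certainty Meter, and the sample assumptions are hypothetical.

```python
from dataclasses import dataclass

SCALE_MAX = 5  # hypothetical 1-5 scale for both impact and certainty


@dataclass
class Assumption:
    statement: str
    impact: int     # 1 = minor, 5 = the idea fails without it
    certainty: int  # 1 = pure opinion, 5 = strong evidence

    def __post_init__(self) -> None:
        for name in ("impact", "certainty"):
            value = getattr(self, name)
            if not 1 <= value <= SCALE_MAX:
                raise ValueError(f"{name} must be between 1 and {SCALE_MAX}")


def risk_score(a: Assumption) -> int:
    """Higher means test sooner: high impact combined with low certainty."""
    uncertainty = SCALE_MAX + 1 - a.certainty
    return a.impact * uncertainty


def prioritise(assumptions: list[Assumption]) -> list[Assumption]:
    """Riskiest assumptions first; ties broken by higher impact."""
    return sorted(assumptions, key=lambda a: (-risk_score(a), -a.impact))


backlog = [
    Assumption("Users will connect their bank account", impact=5, certainty=2),
    Assumption("Customers want weekly summaries", impact=3, certainty=1),
    Assumption("Our API can handle the extra load", impact=5, certainty=4),
    Assumption("Finance will approve the pricing", impact=2, certainty=3),
]

for a in prioritise(backlog):
    print(f"risk={risk_score(a):>2}  {a.statement}")
```

Multiplying the two scores stops a high-impact assumption you already have strong evidence for from crowding out a slightly less critical one that nobody has checked.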

Why It Works:

- It forces teams to confront where their confidence comes from.

- It prevents overconfidence based on weak signals (e.g., “our competitor has it” or “our exec loves it”).

- It ensures that validation focuses on the most significant risks first.

Red Flag (Decider Thinking):

- Teams claiming they “already know” an assumption is valid but can’t back it up with high-confidence evidence.

2. Fake Door Tests: Validating Demand Before Building

- Seekers don’t just ask customers what they want — they test whether people will act.

How It Works:

- Put up a sign-up page, CTA, or purchase button for a feature that doesn’t exist yet.

- Track how many people click or engage before you build anything.

- No engagement? No demand. Move on.
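A fake door test only works if you decide up front what counts as “engagement”. Here is a minimal Python sketch of that decision rule. The `FakeDoorResult` type, the 3% click-rate threshold and the 1,000-visitor minimum are hypothetical values you would agree with your team before the test runs, not industry benchmarks.

```python
from dataclasses import dataclass


@dataclass
class FakeDoorResult:
    visitors: int  # people who saw the CTA for the not-yet-built feature
    clicks: int    # people who clicked it

    def __post_init__(self) -> None:
        if self.clicks > self.visitors:
            raise ValueError("clicks cannot exceed visitors")

    @property
    def click_rate(self) -> float:
        return self.clicks / self.visitors if self.visitors else 0.0


def verdict(result: FakeDoorResult, threshold: float, min_visitors: int) -> str:
    """Compare observed demand with a threshold agreed before the test."""
    if result.visitors < min_visitors:
        return "keep running"   # too little traffic to judge either way
    if result.click_rate >= threshold:
        return "demand signal"  # worth a deeper test, not yet a full build
    return "move on"            # no engagement, no demand


# Hypothetical numbers: 31 clicks from 2,400 visitors, judged against 3%.
print(verdict(FakeDoorResult(visitors=2_400, clicks=31), threshold=0.03, min_visitors=1_000))
```

Fixing the threshold and sample size in advance keeps the team from reinterpreting a weak result as “promising” after the fact.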

Why It Works:

- It removes opinion-based decision-making — you get real behavioural data.

- It saves months of effort on features customers say they want but won’t use.

Red Flag (Decider Thinking):

- Teams saying, “We can’t test it because it’s too early.” If it’s too early to test, it’s too early to build.

3. Quitting Criteria: Knowing When to Walk Away

Seekers set quitting criteria before starting so they don’t fall into the sunk cost trap.

How It Works:

- Before launching an initiative, define what success and failure look like.

- Decide how much time, money and effort you’re willing to spend before killing it.

- If the quitting criteria are met, you walk away — no debate.
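Writing the criteria down as data makes the “no debate” part concrete. The sketch below is a minimal Python illustration, assuming one success metric (weekly active users), one checkpoint date and one spend cap. The field names and figures are hypothetical, and a real initiative would likely track several signals.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class QuittingCriteria:
    """Agreed before the initiative starts; all values here are hypothetical."""
    review_date: date       # checkpoint by which we expect to see a signal
    min_weekly_active: int  # the success signal we need by that date
    max_spend: float        # budget we're willing to burn before stopping


def should_quit(c: QuittingCriteria, today: date, weekly_active: int, spend: float) -> bool:
    """True if any pre-agreed kill trigger has fired."""
    over_budget = spend >= c.max_spend
    missed_target = today >= c.review_date and weekly_active < c.min_weekly_active
    return over_budget or missed_target


criteria = QuittingCriteria(
    review_date=date(2025, 6, 30),
    min_weekly_active=500,
    max_spend=80_000,
)

# Past the checkpoint with 320 weekly actives against a target of 500.
print(should_quit(criteria, today=date(2025, 7, 1), weekly_active=320, spend=55_000))
```

Because `should_quit` only reads values fixed before launch, the kill decision comes from the criteria, not from how attached the team feels on review day.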

Why It Works:

- It removes emotion from decision-making — you don’t get attached to failing ideas.

- It forces teams to stop bad bets early before they spiral into full-blown disasters.

Red Flag (Decider Thinking):

- Never defining success or failure, which leads to endless iterations on things that aren’t working.

4. Behavioural Data Over Surveys: Watching What People Do, Not What They Say

- Customers say one thing but do another. Seekers know that real behaviour beats self-reported data.

How It Works:

Instead of relying on customer interviews alone, track actual behaviour:

- Do users engage with similar features today?

- Have they hacked together workarounds?

- Have past user requests translated into actual adoption?

- Compare what people say vs. what they do.
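One way to answer “have past user requests translated into actual adoption?” is to compute a say/do ratio from features you have already shipped. This is a minimal Python sketch with hypothetical feature names and counts. It assumes you can count who asked for each feature and, of those, who actually uses it.

```python
from dataclasses import dataclass


@dataclass
class ShippedFeature:
    name: str
    requested_by: int  # customers who asked for it (what they said)
    adopted_by: int    # of those requesters, how many actually use it (what they did)


def say_do_ratio(history: list[ShippedFeature]) -> float:
    """Share of past requesters who went on to use what they asked for."""
    asked = sum(f.requested_by for f in history)
    used = sum(f.adopted_by for f in history)
    return used / asked if asked else 0.0


def expected_adopters(new_requests: int, ratio: float) -> float:
    """Discount a raw request count by the historical say/do ratio."""
    return new_requests * ratio


history = [
    ShippedFeature("CSV export", requested_by=120, adopted_by=60),
    ShippedFeature("Dark mode", requested_by=200, adopted_by=30),
    ShippedFeature("Slack integration", requested_by=80, adopted_by=10),
]

ratio = say_do_ratio(history)
print(f"Say/do ratio: {ratio:.0%}")
print(f"160 new requests -> about {expected_adopters(160, ratio):.0f} likely adopters")
```

If only a quarter of past requesters used what they asked for, 160 new requests are better read as roughly 40 likely adopters. The ratio is a rough historical prior, not a forecast.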

Why It Works:

- It catches false positives — ideas that sound good in interviews but don’t drive actual behaviour.

- It helps teams prioritise what users actually value, not just what they say they want.

Red Flag (Decider Thinking):

- Building something just because users asked for it, without checking whether similar past requests led to actual adoption.

5. Smallest Viable Test: Testing the Idea Before Scaling

- Seekers don’t launch big. They start small, test fast, and scale based on evidence.

How It Works:

- Create a lightweight version that delivers the core value instead of a complete build.

- Paper Prototype: Show a sketch or wireframe instead of building software.

- Concierge MVP: Manually deliver the service before automating.

- Wizard of Oz MVP: Fake the back end to see if people use the front end.

- Track engagement and only invest in scaling if there’s traction.

Why It Works:

- It prevents wasting months on features nobody wants.

- It allows iterative learning instead of betting everything on a big launch.

Red Flag (Decider Thinking):

- Treating learning prototypes as half-built products instead of experiments to test demand.

The Bottom Line: Seekers Reduce Their Success Tax

Seekers don’t trust gut feelings, roadmaps, or business cases alone. They use structured, evidence-based practices to minimise risk, validate assumptions, and course-correct early.

Deciders? They ignore these steps, assuming they’re right while racking up a huge Success Tax bill.

Conclusion — Are You Ready To Make The Switch?

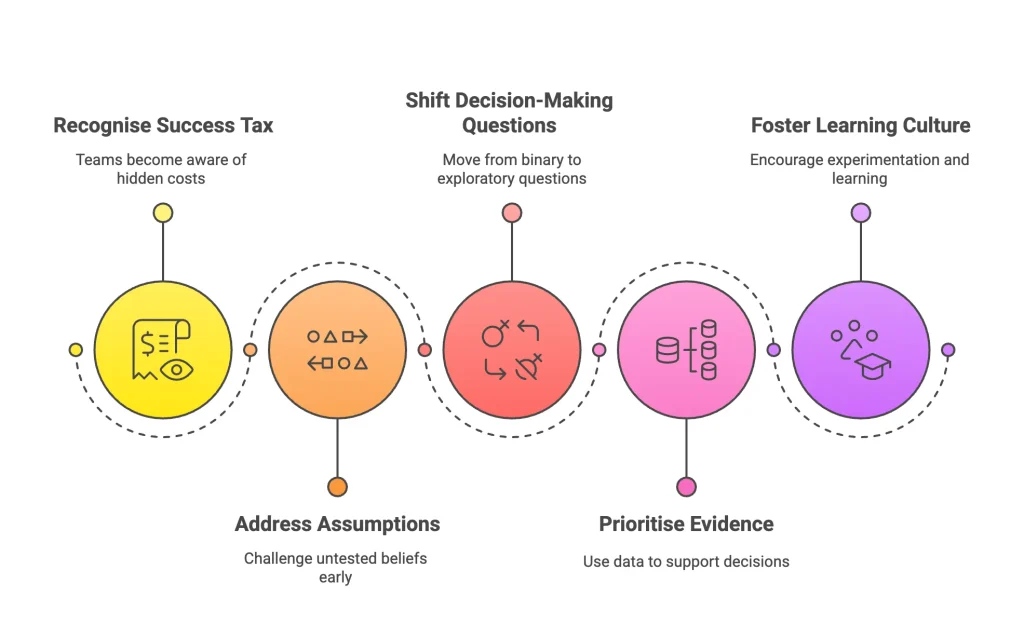

Making the switch from Decider to Seeker isn’t about adding more processes; it’s about rewiring how your team makes decisions: from assumption to evidence, from faith to data, from guessing to testing.

Here’s how to make it real:

1. Make the Success Tax Visible

Teams pay the highest Success Tax when they don’t realise they’re paying it. To break the cycle:

- Call out assumptions early — don’t let untested beliefs masquerade as facts.

- Track wasted effort — how many things have you built that didn’t get used?

- Normalise quitting — celebrate learning as much as launching.

Decider Red Flag: Teams still using “we just know” or “this is how we’ve always done it” as justification.

2. Stop Asking ‘Will We/Won’t We?’ — Start Asking ‘How Will We Know?’

Instead of defaulting to binary decisions (Should we build this? Should we invest in that?), shift to:

- What needs to be true for this to succeed?

- How will we measure if we’re right?

- What would tell us we should quit?

Decider Red Flag: Roadmaps filled with commitments instead of hypotheses.

3. Elevate Evidence Over Opinion

Seekers challenge not just bad ideas but all ideas until the data supports them. Make it a habit to ask:

- Where does our confidence come from? (Use the Certainty Meter!)

- What are the trade-offs and opportunity costs?

- What’s the smallest test we can run before scaling?

Decider Red Flag: Making big bets without clear, high-confidence evidence.

4. Build a Culture That Rewards Learning, Not Just Shipping

Moving fast doesn’t mean launching faster. It means learning faster.

- The best teams quickly kill bad ideas to double down on great ones.

- The real goal isn’t to launch — it’s to drive impact.

- The more your team questions, tests, and adapts, the smaller your Success Tax bill will be.

What about you? Have you seen teams overpay the Success Tax? What’s the most common rationalisation you’ve heard for skipping validation?

Send me your comments — I’d love to hear your take.